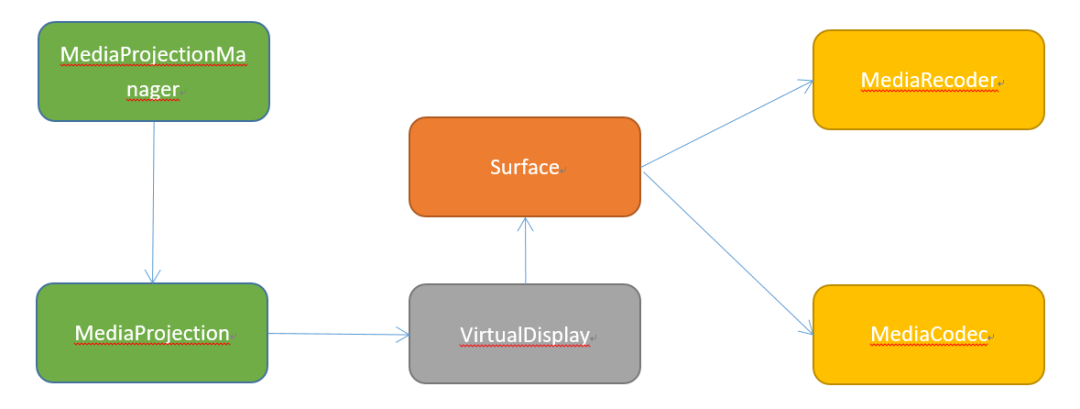

Simple architecture

App

AndroidManifest.xml

    <uses-permission android:name="android.permission.RECORD_AUDIO" />
    <uses-permission android:name="android.permission.MANAGE_MEDIA_PROJECTION" />
    <uses-permission android:name="android.permission.CAPTURE_VIDEO_OUTPUT" />
    <uses-permission android:name="android.permission.CAPTURE_SECURE_VIDEO_OUTPUT" />

Activity

    public class RtcActivity extends Activity {
        // Arbitrary request code; it only has to match in onActivityResult().
        private static final int REQUEST_CODE = 1;

        private MediaProjectionManager mMediaProjectionManager;
        private MediaProjection mMediaProjection;

        @Override
        public void onCreate(Bundle savedInstanceState) {
            super.onCreate(savedInstanceState);
            mMediaProjectionManager = (MediaProjectionManager) getApplication()
                    .getSystemService(Context.MEDIA_PROJECTION_SERVICE);
            // Launches the SystemUI consent dialog; the result arrives in onActivityResult().
            startActivityForResult(
                    mMediaProjectionManager.createScreenCaptureIntent(), REQUEST_CODE);
        }

        @Override
        public void onActivityResult(int requestCode, int resultCode, Intent data) {
            if (requestCode == REQUEST_CODE && resultCode == RESULT_OK) {
                mMediaProjection = mMediaProjectionManager.getMediaProjection(resultCode, data);
            }
        }
    }

MediaProjectionManager

    public final class MediaProjectionManager {
        /** @hide */
        public MediaProjectionManager(Context context) {
            mContext = context;
            IBinder b = ServiceManager.getService(Context.MEDIA_PROJECTION_SERVICE);
            mService = IMediaProjectionManager.Stub.asInterface(b);
            mCallbacks = new ArrayMap<>();
        }

        public Intent createScreenCaptureIntent() {
            Intent i = new Intent();
            i.setClassName("com.android.systemui",
                    "com.android.systemui.media.MediaProjectionPermissionActivity");
            return i;
        }

        public MediaProjection getMediaProjection(int resultCode, @NonNull Intent resultData) {
            if (resultCode != Activity.RESULT_OK || resultData == null) {
                return null;
            }
            IBinder projection = resultData.getIBinderExtra(EXTRA_MEDIA_PROJECTION);
            if (projection == null) {
                return null;
            }
            return new MediaProjection(mContext, IMediaProjection.Stub.asInterface(projection));
        }
    }

MediaProjection

    public final class MediaProjection {
        /** @hide */
        public MediaProjection(Context context, IMediaProjection impl) {
            mCallbacks = new ArrayMap<Callback, CallbackRecord>();
            mContext = context;
            mImpl = impl;
            try {
                mImpl.start(new MediaProjectionCallback());
            } catch (RemoteException e) {
                throw new RuntimeException("Failed to start media projection", e);
            }
        }

        /**
         * Mirrors the screen into the Surface supplied by the caller.
         * @hide
         */
        public VirtualDisplay createVirtualDisplay(@NonNull String name,
                int width, int height, int dpi, boolean isSecure, @Nullable Surface surface,
                @Nullable VirtualDisplay.Callback callback, @Nullable Handler handler) {
            DisplayManager dm = (DisplayManager) mContext.getSystemService(Context.DISPLAY_SERVICE);
            int flags = isSecure ? DisplayManager.VIRTUAL_DISPLAY_FLAG_SECURE : 0;
            return dm.createVirtualDisplay(this, name, width, height, dpi, surface,
                    flags | DisplayManager.VIRTUAL_DISPLAY_FLAG_AUTO_MIRROR |
                    DisplayManager.VIRTUAL_DISPLAY_FLAG_PRESENTATION, callback, handler);
        }
    }

SystemUI

    public class MediaProjectionPermissionActivity extends Activity {
        private IMediaProjectionManager mService;

        @Override
        public void onClick(DialogInterface dialog, int which) {
            if (which == AlertDialog.BUTTON_POSITIVE) {
                setResult(RESULT_OK, getMediaProjectionIntent(
                        mUid, mPackageName, mPermanentGrant));
            }
        }

        private Intent getMediaProjectionIntent(int uid, String packageName, boolean permanentGrant)
                throws RemoteException {
            IMediaProjection projection = mService.createProjection(uid, packageName,
                    MediaProjectionManager.TYPE_SCREEN_CAPTURE, permanentGrant);
            Intent intent = new Intent();
            intent.putExtra(MediaProjectionManager.EXTRA_MEDIA_PROJECTION, projection.asBinder());
            return intent;
        }
    }

SystemServer

MediaProjectionManagerService

    public final class MediaProjectionManagerService extends SystemService
            implements Watchdog.Monitor {
        @Override
        public void onStart() {
            publishBinderService(Context.MEDIA_PROJECTION_SERVICE, new BinderService(),
                    false /*allowIsolated*/);
        }

        private final class BinderService extends IMediaProjectionManager.Stub {
            @Override // Binder call
            public IMediaProjection createProjection(int uid, String packageName, int type,
                    boolean isPermanentGrant) {
                if (mContext.checkCallingPermission(Manifest.permission.MANAGE_MEDIA_PROJECTION)
                        != PackageManager.PERMISSION_GRANTED) {
                    throw new SecurityException("Requires MANAGE_MEDIA_PROJECTION in order to grant "
                            + "projection permission");
                }
                if (packageName == null || packageName.isEmpty()) {
                    throw new IllegalArgumentException("package name must not be empty");
                }
                long callingToken = Binder.clearCallingIdentity();
                MediaProjection projection;
                try {
                    projection = new MediaProjection(type, uid, packageName);
                    if (isPermanentGrant) {
                        mAppOps.setMode(AppOpsManager.OP_PROJECT_MEDIA,
                                projection.uid, projection.packageName, AppOpsManager.MODE_ALLOWED);
                    }
                } finally {
                    Binder.restoreCallingIdentity(callingToken);
                }
                return projection;
            }
        }

        private final class MediaProjection extends IMediaProjection.Stub {
            public MediaProjection(int type, int uid, String packageName) {
                mType = type;
                this.uid = uid;
                this.packageName = packageName;
                userHandle = new UserHandle(UserHandle.getUserId(uid));
            }
        }
    }
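
The excerpts above suggest a three-party handoff: the app asks for consent, SystemUI (which holds MANAGE_MEDIA_PROJECTION) asks the system service for a projection token, and the app unwraps that token from the result Intent. The following is a minimal plain-Java model of that flow, not framework code. All class names here (`ProjectionToken`, `ProjectionService`, `ConsentDialog`, `ProjectionHandoffDemo`) and the extra key are hypothetical stand-ins, and a `Map` stands in for the Intent extras.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical stand-in for the IMediaProjection binder token. */
final class ProjectionToken {
    final int uid;
    final String packageName;

    ProjectionToken(int uid, String packageName) {
        this.uid = uid;
        this.packageName = packageName;
    }
}

/** Models the checks in createProjection(): only privileged callers may mint a token. */
final class ProjectionService {
    ProjectionToken createProjection(boolean callerHoldsManagePermission,
                                     int uid, String packageName) {
        if (!callerHoldsManagePermission) {
            throw new SecurityException("Requires MANAGE_MEDIA_PROJECTION");
        }
        if (packageName == null || packageName.isEmpty()) {
            throw new IllegalArgumentException("package name must not be empty");
        }
        return new ProjectionToken(uid, packageName);
    }
}

/** Models the SystemUI dialog: it, not the app, talks to the service. */
final class ConsentDialog {
    // Placeholder key; the real constant is MediaProjectionManager.EXTRA_MEDIA_PROJECTION.
    static final String EXTRA = "extra.MEDIA_PROJECTION";
    private final ProjectionService service;

    ConsentDialog(ProjectionService service) {
        this.service = service;
    }

    /** Returns the "result Intent" extras; empty when the user declines. */
    Map<String, Object> onUserDecision(boolean allowed, int uid, String packageName) {
        Map<String, Object> resultData = new HashMap<>();
        if (allowed) {
            resultData.put(EXTRA, service.createProjection(true, uid, packageName));
        }
        return resultData;
    }
}

final class ProjectionHandoffDemo {
    /** Models getMediaProjection(): unwrap the token, or return null if consent failed. */
    static ProjectionToken getMediaProjection(boolean resultOk, Map<String, Object> resultData) {
        if (!resultOk || resultData == null) {
            return null;
        }
        return (ProjectionToken) resultData.get(ConsentDialog.EXTRA);
    }
}
```

In this model the app never calls `createProjection` itself, which mirrors why the permission check in the service throws for ordinary callers.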

Recording the screen to a file: MediaRecorder

MediaRecorder

    public class MediaRecorder {
        private Surface mSurface;

        public native Surface getSurface();
    }

App usage

    mMediaRecorder = new MediaRecorder();
    mMediaRecorder.setVideoSource(MediaRecorder.VideoSource.SURFACE);
    mMediaRecorder.setAudioSource(MediaRecorder.AudioSource.MIC);
    mMediaRecorder.setOutputFormat(MediaRecorder.OutputFormat.MPEG_4);
    mMediaRecorder.setOutputFile(mDstPath);
    mMediaRecorder.setVideoSize(mWidth, mHeight);
    mMediaRecorder.setVideoFrameRate(FRAME_RATE);
    mMediaRecorder.setVideoEncodingBitRate(mBitRate);
    mMediaRecorder.setVideoEncoder(MediaRecorder.VideoEncoder.H264);
    mMediaRecorder.setAudioEncoder(MediaRecorder.AudioEncoder.AAC);
    try {
        mMediaRecorder.prepare();
        // getSurface() is only valid after prepare(); the virtual display renders into it.
        mVirtualDisplay = mMediaProjection.createVirtualDisplay(TAG + "-display",
                mWidth, mHeight, mDpi, DisplayManager.VIRTUAL_DISPLAY_FLAG_PUBLIC,
                mMediaRecorder.getSurface(), null, null);
        Log.i(TAG, "created virtual display: " + mVirtualDisplay);
        mMediaRecorder.start();
        Log.i(TAG, "mediarecorder start");
    } catch (Exception e) {
        Log.e(TAG, "failed to start screen recording", e);
    }
    // setVideoEncodingBitRate() takes bits per second, so convert for the log.
    Log.i(TAG, "media recorder bit rate: " + (mBitRate / 1000) + " kbps");
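
The snippet above leaves open how `mWidth`, `mHeight` and `mBitRate` are chosen. One possible approach, sketched here as an assumption rather than anything the original prescribes, is to scale the screen to a maximum long edge, round both dimensions down to even numbers (video encoders commonly expect this), and derive the bit rate from a bits-per-pixel factor. `CaptureParams`, `fit` and the `bitsPerPixel` parameter are hypothetical names, and the 0.1 factor in the usage note is only an illustrative starting point.

```java
/** Hypothetical helper: picks a capture size and bit rate for screen recording. */
final class CaptureParams {
    final int width;
    final int height;
    final int bitRate; // bits per second, the unit setVideoEncodingBitRate() expects

    CaptureParams(int width, int height, int bitRate) {
        this.width = width;
        this.height = height;
        this.bitRate = bitRate;
    }

    /** Clears the lowest bit, so odd values become the next lower even value. */
    static int roundDownToEven(int value) {
        return value & ~1;
    }

    /**
     * Scales the screen so its longer edge is at most maxLongEdge, keeps the
     * aspect ratio, and estimates the bit rate as width * height * fps * bitsPerPixel.
     */
    static CaptureParams fit(int screenWidth, int screenHeight, int maxLongEdge,
                             int fps, double bitsPerPixel) {
        int longEdge = Math.max(screenWidth, screenHeight);
        double scale = longEdge > maxLongEdge ? (double) maxLongEdge / longEdge : 1.0;
        int width = roundDownToEven((int) Math.round(screenWidth * scale));
        int height = roundDownToEven((int) Math.round(screenHeight * scale));
        int bitRate = (int) Math.round((double) width * height * fps * bitsPerPixel);
        return new CaptureParams(width, height, bitRate);
    }
}
```

For example, `CaptureParams.fit(1080, 2340, 1280, 30, 0.1)` yields a 590 x 1280 capture; the resulting fields could then be passed to `setVideoSize()` and `setVideoEncodingBitRate()`.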

Live-streaming the screen: MediaCodec or FFmpeg

MediaCodec

    final public class MediaCodec {
        public native final Surface createInputSurface();
    }

App usage

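    mEncoder = MediaCodec.createEncoderByType(MIME_TYPE);
    // For Surface input, format should set KEY_COLOR_FORMAT to COLOR_FormatSurface.
    mEncoder.configure(format, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE);
    // The Surface returned here is the encoder's input; pass it to createVirtualDisplay().
    mInputSurface = mEncoder.createInputSurface();
    mEncoder.start();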

Remote control: WebRTC

PeerConnectionFactory

    public class PeerConnectionFactory {
        public VideoSource createVideoSource(VideoCapturer capturer) {
            org.webrtc.EglBase.Context eglContext =
                    this.localEglbase == null ? null : this.localEglbase.getEglBaseContext();
            // Surface create
            SurfaceTextureHelper surfaceTextureHelper =
                    SurfaceTextureHelper.create("VideoCapturerThread", eglContext);
            long nativeAndroidVideoTrackSource = nativeCreateVideoSource(
                    this.nativeFactory, surfaceTextureHelper, capturer.isScreencast());
            CapturerObserver capturerObserver =
                    new AndroidVideoTrackSourceObserver(nativeAndroidVideoTrackSource);
            capturer.initialize(surfaceTextureHelper, ContextUtils.getApplicationContext(),
                    capturerObserver);
            return new VideoSource(nativeAndroidVideoTrackSource);
        }
    }

SurfaceTextureHelper

    public class SurfaceTextureHelper {
        private final SurfaceTexture surfaceTexture;

        public static SurfaceTextureHelper create(final String threadName, final Context sharedContext) {
            HandlerThread thread = new HandlerThread(threadName);
            thread.start();
            final Handler handler = new Handler(thread.getLooper());
            return (SurfaceTextureHelper)ThreadUtils.invokeAtFrontUninterruptibly(handler, new Callable<SurfaceTextureHelper>() {
                public SurfaceTextureHelper call() {
                    try {
                        return new SurfaceTextureHelper(sharedContext, handler);
                    } catch (RuntimeException var2) {
                        Logging.e("SurfaceTextureHelper", threadName + " create failure", var2);
                        return null;
                    }
                }
            });
        }

        private SurfaceTextureHelper(Context sharedContext, Handler handler) {
            this.hasPendingTexture = false;
            this.isTextureInUse = false;
            this.isQuitting = false;
            this.setListenerRunnable = new Runnable() {
                public void run() {
                    Logging.d("SurfaceTextureHelper", "Setting listener to " + SurfaceTextureHelper.this.pendingListener);
                    SurfaceTextureHelper.this.listener = SurfaceTextureHelper.this.pendingListener;
                    SurfaceTextureHelper.this.pendingListener = null;
                    if (SurfaceTextureHelper.this.hasPendingTexture) {
                        SurfaceTextureHelper.this.updateTexImage();
                        SurfaceTextureHelper.this.hasPendingTexture = false;
                    }
                }
            };
            if (handler.getLooper().getThread() != Thread.currentThread()) {
                throw new IllegalStateException("SurfaceTextureHelper must be created on the handler thread");
            } else {
                this.handler = handler;
                this.eglBase = EglBase.create(sharedContext, EglBase.CONFIG_PIXEL_BUFFER);
                try {
                    this.eglBase.createDummyPbufferSurface();
                    this.eglBase.makeCurrent();
                } catch (RuntimeException var4) {
                    this.eglBase.release();
                    handler.getLooper().quit();
                    throw var4;
                }
                // 36197 == GLES11Ext.GL_TEXTURE_EXTERNAL_OES (0x8D65)
                this.oesTextureId = GlUtil.generateTexture(36197);
                // Key point: this SurfaceTexture wraps the OES texture and receives the captured frames.
                this.surfaceTexture = new SurfaceTexture(this.oesTextureId);
                this.surfaceTexture.setOnFrameAvailableListener(new OnFrameAvailableListener() {
                    public void onFrameAvailable(SurfaceTexture surfaceTexture) {
                        SurfaceTextureHelper.this.hasPendingTexture = true;
                        SurfaceTextureHelper.this.tryDeliverTextureFrame();
                    }
                });
            }
        }
    }
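
The `hasPendingTexture` and `isTextureInUse` fields above hint at a delivery gate: a new frame is handed to the listener only when one is pending and the previous texture has been given back. The following is a simplified, single-threaded plain-Java model of that idea, not WebRTC source. `FrameGate` and its integer frame ids are hypothetical, and the `returnTextureFrame` counterpart is assumed since the excerpt does not show it.

```java
import java.util.ArrayList;
import java.util.List;

/** Simplified model of a one-frame-in-flight delivery gate. */
final class FrameGate {
    private boolean hasPendingTexture = false;
    private boolean isTextureInUse = false;
    private boolean listening = false;
    private int latestFrame = -1;

    /** Frames actually handed to the consumer, in delivery order. */
    final List<Integer> delivered = new ArrayList<>();

    /** Analogue of startListening(): a consumer is now attached. */
    void startListening() {
        listening = true;
        tryDeliver();
    }

    /** Analogue of onFrameAvailable(): the producer queued a new frame. */
    void onFrameAvailable(int frameId) {
        latestFrame = frameId; // a newer frame replaces an undelivered older one
        hasPendingTexture = true;
        tryDeliver();
    }

    /** Assumed counterpart: the consumer has finished with the texture. */
    void returnTextureFrame() {
        isTextureInUse = false;
        tryDeliver();
    }

    /** Delivers only when attached, a frame is pending, and the texture is free. */
    private void tryDeliver() {
        if (!listening || !hasPendingTexture || isTextureInUse) {
            return;
        }
        isTextureInUse = true;
        hasPendingTexture = false;
        delivered.add(latestFrame);
    }
}
```

In this model, frames that arrive while the texture is still in use are coalesced, so a slow consumer skips intermediate frames instead of queueing them.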

ScreenCapturerAndroid

    public class ScreenCapturerAndroid implements VideoCapturer, OnTextureFrameAvailableListener {
        public ScreenCapturerAndroid(Intent mediaProjectionPermissionResultData, Callback mediaProjectionCallback) {
            this.mediaProjectionPermissionResultData = mediaProjectionPermissionResultData;
            this.mediaProjectionCallback = mediaProjectionCallback;
        }

        public synchronized void initialize(SurfaceTextureHelper surfaceTextureHelper, Context applicationContext, CapturerObserver capturerObserver) {
            this.checkNotDisposed();
            if (capturerObserver == null) {
                throw new RuntimeException("capturerObserver not set.");
            } else {
                this.capturerObserver = capturerObserver;
                if (surfaceTextureHelper == null) {
                    throw new RuntimeException("surfaceTextureHelper not set.");
                } else {
                    this.surfaceTextureHelper = surfaceTextureHelper;
                    // "media_projection" == Context.MEDIA_PROJECTION_SERVICE
                    this.mediaProjectionManager = (MediaProjectionManager)applicationContext.getSystemService("media_projection");
                }
            }
        }

        public synchronized void startCapture(int width, int height, int ignoredFramerate) {
            this.checkNotDisposed();
            this.width = width;
            this.height = height;
            // -1 == Activity.RESULT_OK
            this.mediaProjection = this.mediaProjectionManager.getMediaProjection(-1, this.mediaProjectionPermissionResultData);
            this.mediaProjection.registerCallback(this.mediaProjectionCallback, this.surfaceTextureHelper.getHandler());
            this.createVirtualDisplay();
            this.capturerObserver.onCapturerStarted(true);
            this.surfaceTextureHelper.startListening(this);
        }

        private void createVirtualDisplay() {
            this.surfaceTextureHelper.getSurfaceTexture().setDefaultBufferSize(this.width, this.height);
            // 400 is the display density in dpi; 3 == VIRTUAL_DISPLAY_FLAG_PUBLIC | VIRTUAL_DISPLAY_FLAG_PRESENTATION
            this.virtualDisplay = this.mediaProjection.createVirtualDisplay("WebRTC_ScreenCapture", this.width, this.height, 400, 3, new Surface(this.surfaceTextureHelper.getSurfaceTexture()), (android.hardware.display.VirtualDisplay.Callback)null, (Handler)null);
        }
    }
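
The decompiled WebRTC code above contains several bare numbers (`-1`, `3`, `36197`) where the original source used named constants. The sketch below mirrors the relevant Android constant values in plain Java so they can be decoded without the SDK. `CaptureConstants` and `describeFlags` are hypothetical helper names; the numeric values are copied from `DisplayManager`, `Activity` and `GLES11Ext`.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper that mirrors a few Android constants for decoding magic numbers. */
final class CaptureConstants {
    // Mirrored from android.hardware.display.DisplayManager.
    static final int VIRTUAL_DISPLAY_FLAG_PUBLIC = 1 << 0;
    static final int VIRTUAL_DISPLAY_FLAG_PRESENTATION = 1 << 1;
    static final int VIRTUAL_DISPLAY_FLAG_SECURE = 1 << 2;
    static final int VIRTUAL_DISPLAY_FLAG_OWN_CONTENT_ONLY = 1 << 3;
    static final int VIRTUAL_DISPLAY_FLAG_AUTO_MIRROR = 1 << 4;

    // Mirrored from android.app.Activity.
    static final int RESULT_OK = -1;

    // Mirrored from android.opengl.GLES11Ext.
    static final int GL_TEXTURE_EXTERNAL_OES = 0x8D65;

    /** Renders a virtual-display flag bitmask as a readable list of flag names. */
    static String describeFlags(int flags) {
        List<String> names = new ArrayList<>();
        if ((flags & VIRTUAL_DISPLAY_FLAG_PUBLIC) != 0) names.add("PUBLIC");
        if ((flags & VIRTUAL_DISPLAY_FLAG_PRESENTATION) != 0) names.add("PRESENTATION");
        if ((flags & VIRTUAL_DISPLAY_FLAG_SECURE) != 0) names.add("SECURE");
        if ((flags & VIRTUAL_DISPLAY_FLAG_OWN_CONTENT_ONLY) != 0) names.add("OWN_CONTENT_ONLY");
        if ((flags & VIRTUAL_DISPLAY_FLAG_AUTO_MIRROR) != 0) names.add("AUTO_MIRROR");
        return names.isEmpty() ? "NONE" : String.join(" | ", names);
    }
}
```

With these values, `CaptureConstants.describeFlags(3)` returns `"PUBLIC | PRESENTATION"`, and `36197` equals `GL_TEXTURE_EXTERNAL_OES`, the texture target a `SurfaceTexture` requires.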

App usage

    VideoCapturer videoCapturer = new ScreenCapturerAndroid(
            mMediaProjectionPermissionResultData, new MediaProjection.Callback() {
                @Override
                public void onStop() {
                    report("User revoked permission to capture the screen.");
                }
            });
    mVideoSource = factory.createVideoSource(videoCapturer);
    videoCapturer.startCapture(mPeerConnParams.videoWidth, mPeerConnParams.videoHeight,
            mPeerConnParams.videoFps);